Coming off stage, I’ve often wondered whether the audience would have had a better show if I’d pressed play on a particularly good studio take, and bobbed my head to my email inbox instead. My thing is a kind of improvised film, but anybody who has used a laptop on stage must have felt something similar at some point. My instinct is to make that laptop a better tool, or turn the interface into something legible for the audience. As a designer I’d need a way of reasoning about the situation… and not being able to reason about live events was the thread I kept on pulling.

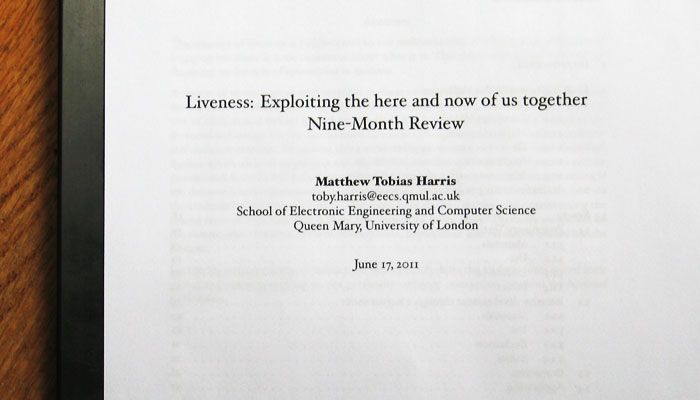

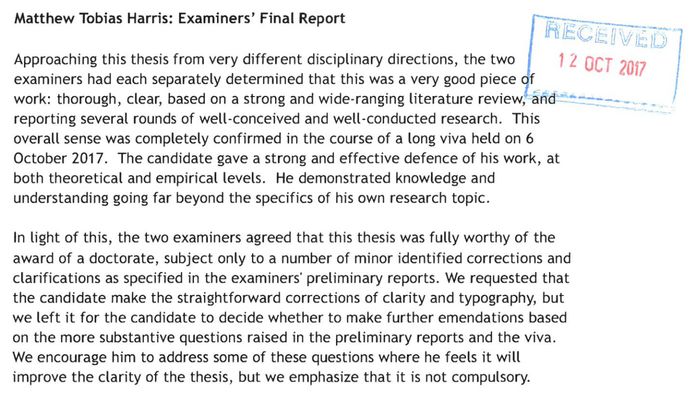

That thread led to a place on the Media and Arts Technology programme at Queen Mary University of London, and some years later, a PhD dissertation. The examiners judged it “a very good piece of work: thorough, clear, based on strong and wide-ranging literature review, and reporting several rounds of well-conceived and well-conducted research”. toby*spark? dr*spark!

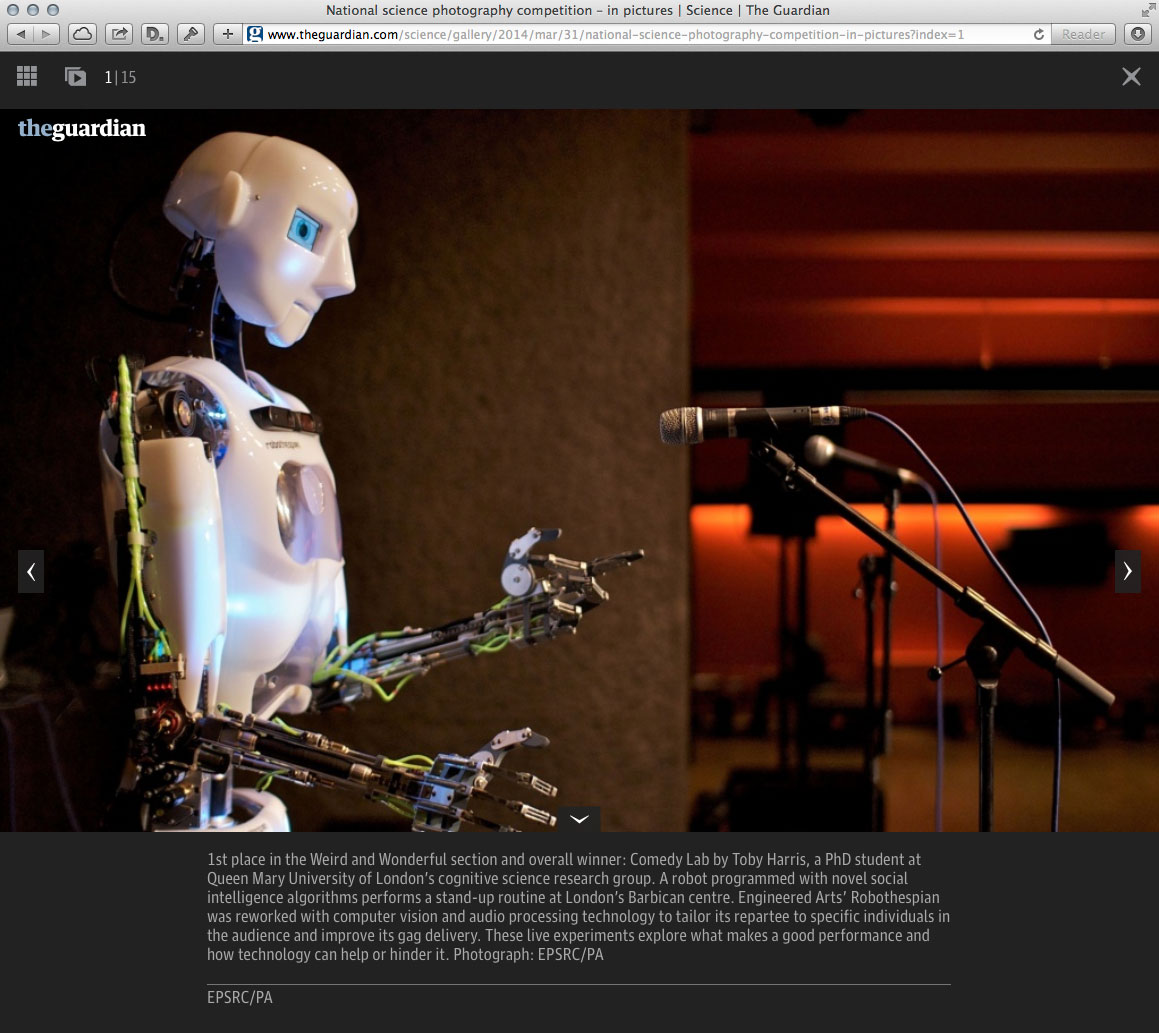

You can get a quick hit here, where my work got into national newspapers. The most appropriate introduction is probably this conference talk, where I get to play a little with everybody being there live, and sell my epistemic position: audiences and interaction, empirically.

But the real thing, well it goes something like this…

Dissertation Outline

Music in concert, sport in a stadium, comedy in a club, drama in a theatre. These are live events. And there is something to being there live. Something that transcends the performance genre. Something that is about being there at an event, in the moment: caught in the din of the crowd, or suddenly aware you could hear a pin drop. This something is the topic of this dissertation.

The research works to transform the vernacular live into operational processes and phenomena. The goal is to produce an account of ‘being there live’ by describing how features common to live events shape the experiences had in them. This will necessarily pare away much of the richness of these genres of performance, as it will the richness of individual events. What is sought is a foundational understanding, upon which such richness can be better considered.

The thesis is that an interactional analysis provides the simplest, most clearly expressed and most easily understood account of the liveness of live events. This is drawn from empirical investigation of the factors that contribute to the sense of being there live. In particular, it asks whether there are general patterns of interaction that hold across live events.

1. On the liveness of live events

First, the notion of the liveness of live events is set out. Chapter one uses existing literature to focus the research on human interaction. It does this by developing the examples of music in concert, comedy in a club and drama in a theatre, and relating these to theoretical accounts of performance and liveness. Chapter one concludes by establishing the need for a more perspicuous account of liveness than is found in the literature.

2. Examining an event

The accounts of live events switch from written sources to direct observation in chapter two: a stand-up comedy event is described and analysed. The idea of performance as an interactional achievement is supported, most simply by the performer stating that the act is going to be a dialogue with the audience, but more pervasively by applying some of the work identified in chapter one to this new data. The study of mass interaction is limited, however, and this chapter ultimately shows the need for a better understanding of what to look for in human interaction, and for a different approach to data collection.

3. Audiences and interaction

The observational study of stand-up comedy demonstrated a gap in theory, method and instrumentation for the study of mass interaction in live events. Chapter three addresses this gap by reviewing literature on human interaction. Applying the concerns of this literature in the context of live events leads to consideration of mass spectatorship. Is there a distinction between people who are merely massed together and audiences? This chapter argues that there is, and that it consists in the specific kinds of social organisation involved.

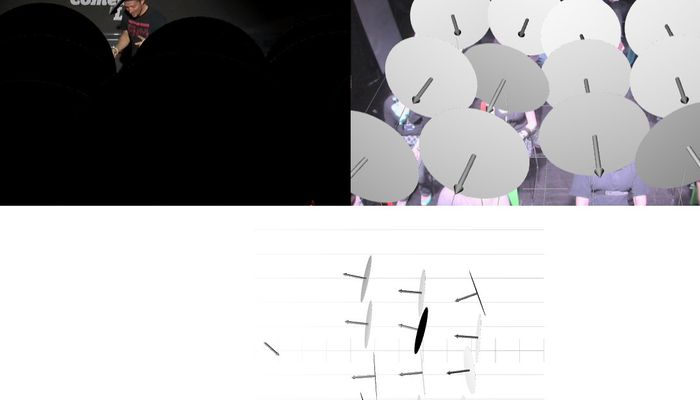

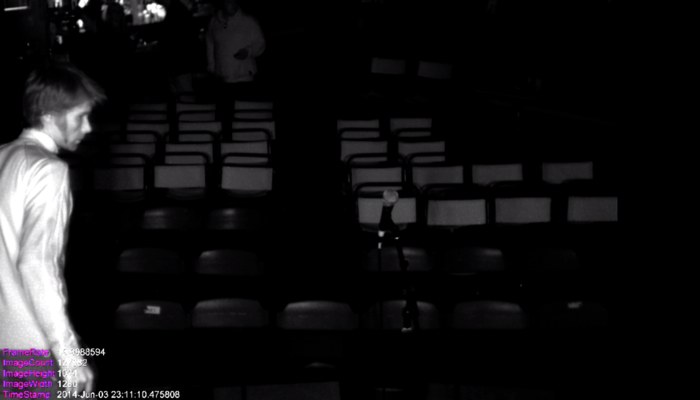

4. Experimenting with performance

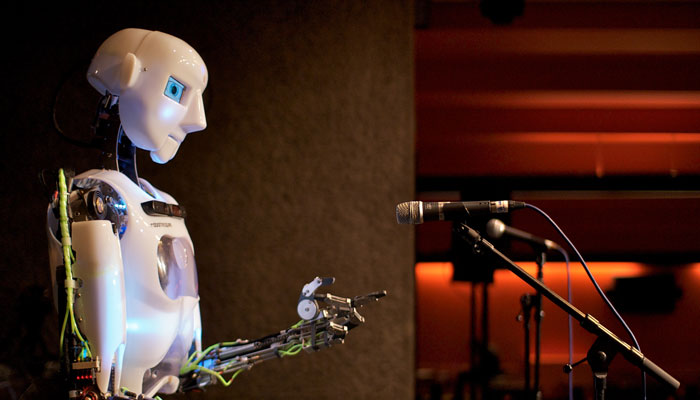

The literature reviewed in chapter three also motivates an experimental programme. Chapter four presents the first experiment, establishing Comedy Lab. A live performance experiment is staged that tests audience responses to a robot performer’s gaze and gesture. This chapter provides the first direct evidence of individual performer–audience dynamics within an audience, and establishes the viability of live performance experiments.

(See also: tobyz.net project page/)

5. Experimenting with audiences, part one

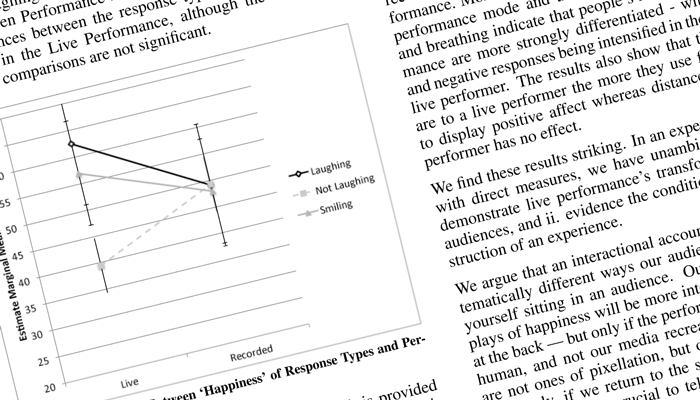

The two main Comedy Lab experiments are presented in chapters five and six. With the first experiment having provided evidence of a social effect of co-presence, these two test the social effects of co-presence to the fullest extent practicable. This requires an expansion of the instrumentation, which opens chapter five. The basic premise of the experiment that follows is to have the performer either interacting with the audience or not, and see what performer–audience and audience–audience dynamics are identifiable. The experiment contrasts live and recorded performance, directly addressing a topic that animates so much of the debate identified in chapter one. The data provide good evidence for social dynamics within the audience, but little evidence for performer–audience interaction. This emphasises that both conditions are live events: even though the recorded condition is ostensibly not live, a live audience is present regardless, and it is this that matters. Overall, the results affirm that events are socially structured situations with heterogeneous audiences.

6. Experimenting with audiences, part two

The second main Comedy Lab experiment is presented in chapter six. The manipulation is now of the audience. The basic premise is to vary the exposure of individuals within the audience. The experiment contrasts being lit and being in the dark, when everyone around is also lit or not. The data provide strong evidence for social dynamics within the audience, and limited evidence for performer–audience dynamics. Spotlighting individuals reduces their responses, while everyone being lit increases their responses: it is the effect of being picked out, not of being lit *per se*, that matters. The results affirm that live events are social-spatial environments with heterogeneous audiences.

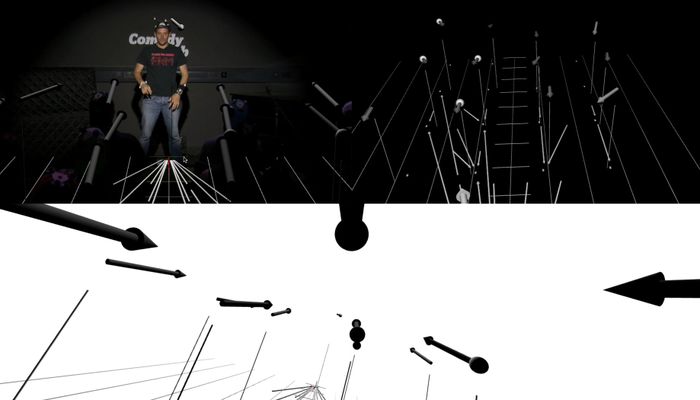

7. Visualising performer–audience dynamics

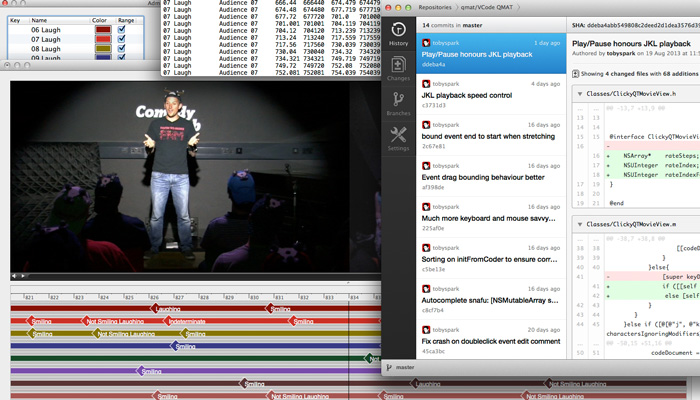

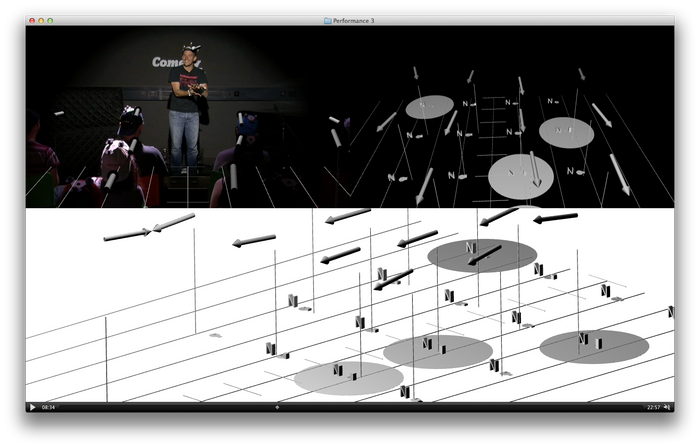

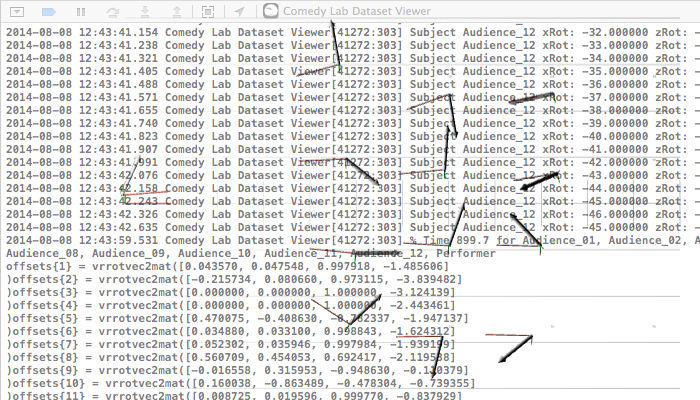

In pursuing Comedy Lab, the challenges of capturing the behaviour of performers and audiences were repeatedly addressed. Beyond the issues of instrumentation already discussed, the data sources were diverse, and their combination and interpretation required original work throughout. Building on this work, a further contribution of method is made in chapter seven. A method to facilitate inductive analyses of performer–audience dynamics is presented, along with the dataset visualiser tool developed to support it. In the same way that video serves the study of face-to-face dialogue, augmented video and interactive visualisation can serve the study of live audiences.

(See also: tobyz.net project page/)

8. Liveness: an interactional account

The opening chapter set out the thesis that an interactional analysis should provide the simplest, most perspicuous account of the liveness of live events. The chapters leading up to the final chapter, chapter eight, have put forward an empirical understanding of the interactional dynamics of particular live events. This is now synthesised into an interactional account of liveness.

First, the Comedy Lab results are discussed as a response to the apparent paradox set up earlier in the dissertation. The programmatic hypothesis is that across live events, generalised patterns of mass interaction should be identifiable. However, the interactional mechanisms that are well understood are dyadic and are found in everyday contexts. At first sight, live events – massed! an escape from the everyday! – would seem to be neither.

Following this, the interactional account of liveness is described. The concept of social topography is introduced and the nature of experience considered. It is argued that the experiments provide evidence that the kinds of experience-shaping conversations had after an event – “did you enjoy it?” – are happening, pervasively, during the event. With different interactional resources available, they cannot simply be the complex verbal constructions of dialogue, but they are there nonetheless: moments of interaction that can change the whole trajectory of an experience. The interactional understanding of liveness put forward is then used variously to underpin, and to undermine, some ideas of liveness encountered in the literature.

The exposition is completed with a consideration of how this account can provide a systematic basis for design. It argues that people have long been alive to the issue of liveness, and that technological interventions in particular can be powerful ways of reconfiguring experiences unique to live events. Further, as the dynamics of the interactions amongst audience members have been shown to be key to the experience of a live event, new opportunities for intervention will open up if practitioners attend to these dynamics directly.

Finally, the investigation of unfocussed interactions is discussed as future work, with specific challenges and risks informed by the Comedy Lab analysis. And it is noted that measuring what is going on between audience members, making sense of those measures, doing this at a much finer grain than anyone else has considered, and relating all this to experience… that this shows the need for a different orientation from that of performance studies, cognitive psychology, or even audience studies.

Document and dataset

- Dissertation - https://qmro.qmul.ac.uk/xmlui/handle/123456789/30624

- Dataset - https://github.com/tobyspark/ComedyLab

- Dataset Visualiser - https://github.com/tobyspark/Comedy-Lab-Dataset-Viewer