having just overhauled the ‘about the live in live cinema’ presentation for the IMAP seminar, i thought it should be quite straightforward to translate this into a 4,000 word essay – my penance for sitting in on a module from QMUL’s excellent drama department last semester. how wrong could i be: the structure of my argument turned out quite differently. all the better for it, however.

Three silhouettes, bodies poised above glowing buttons; a piercing light scanning beams across the void of the image. ‘Rhythms + Visions: Expanded + Live’ says the text. Flipping the flyer over, the venue, the School of Cinematic Arts at the University of Southern California, lends an air of authority, and finally in the body text a definition: ‘a live-cinema event’.

I was there — in fact, I am one of the silhouettes on the flyer — and the ‘live-cinema event’ shall frame the following discourse on liveness, media-based performance, and how the role of performance in a true live cinema needs to be rethought.

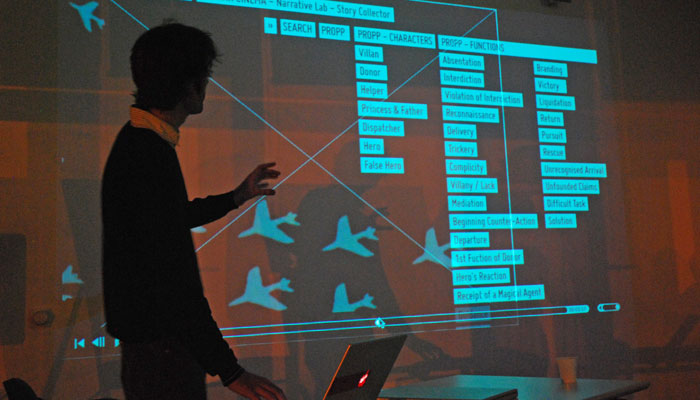

Walking into the School of Cinematic Arts, there was no being led into the dark of a cinema theatre; rather, this would be an exploration through the outdoor spaces of the complex. Moving image works were aligned onto the architecture, and scrims echoed projections in space. Finally, a stage area. The first act starting: Scott Pagano accompanied by four musicians. For all the unconventional setting thus far, this is a setup all will recognise: there is what could be termed a cinema-grade screen with performers in front, and rows of seating laid out beyond.

The musicians are playing, seemingly consumed by their instruments and keeping in check with each other. Pagano is standing, the only one twisted around to face the screen rather than the audience. In his hands, an iPad upon which, and with which, he is furiously gesturing. On the screen an abstract composition is unfolding, organic forms built out with photographic elements, a triumph of aesthetic. The music is instrumental and amplified; without naming a genre, it’s accessible to the Los Angeles audience: guitars, keyboards, percussion. The audience seem receptive; there is a pleasing fidelity and sheen to the work.

But what here is live? But what here is cinema? These are the questions in my mind as I watch, and to which we will return.

Next a performance from the collective of which I am part, but not a piece with my direct involvement; I am still in the audience. Endless Cities by D-Fuse. It is a film in the Ruttmann and Vertov tradition, a montage of urban scenes from around the world, and is accompanied by a live score: musique concrète performed from a laptop with percussion accompaniment. Again it seems accessible, and in the photographic detail there is much to latch onto and be absorbed by.

It’s Live Cinema in the sense that I first heard the term: a musical accompaniment to a silent film. A montage from the kino-eye, it’s easier here to answer ‘what here is cinema’ than with the Pagano piece. But I still wonder, what is really live here, and why bother?

The final performance is in many ways my creation, and so here I cannot report from the audience, but can offer my view as a performer. Which is one of immense frustration. Starting out, I am in a good position: we have an expanded staging that breaks the imagery out of the single frame, a developed aesthetic that abstracts footage in sync to the music, and the impressive shot bank of Endless Cities to pull from. It’s less the dérive and more the impressionism of a late-night taxi ride. And we’ve performed it really well before. But that is precisely what is killing me by the end of this particular performance. We have performed it better before, so wouldn’t a recording of that performance have served us better? It’s a recognition that performance in this context translates entirely to the audio-visual output, for our actual performance is opaque to the audience, operating somewhere between obscure symbols on an obscured screen and twitching trackpad fingers. At which point, rather than taking the best performance so far to play back, I ask myself why not just create a master version in the studio and be done with it?

This is the terrain from which I argue. My motivation is not to categorise art or debate concepts, but to get to the heart of what a true live cinema could be.

The essay needs a revision – given the time constraints it’s really just a first draft – which the next outing of ‘about the live in live cinema’ should provide, whether that is website, journal, seminar or lecture.