the first challenge for the drawing interactions prototype app is to get ‘hands-on with time’. what does that mean? well, clearly controlling playback is key when analysing video data. but framing it that way is unhelpful, as it makes playback the goal. rather, the analyst’s task is really to see actions-through-time.

pragmatically, when i read or hear discussions of video data analysis, working frame-by-frame comes up time and time again, along with repeatedly studying the same tiny snippets. but if i think of the ‘gold standard’ of controlling video playback – the jog-shuttle controllers of older analogue equipment, or the ‘J-K-L’ of modern editing software – they don’t really address those needs.

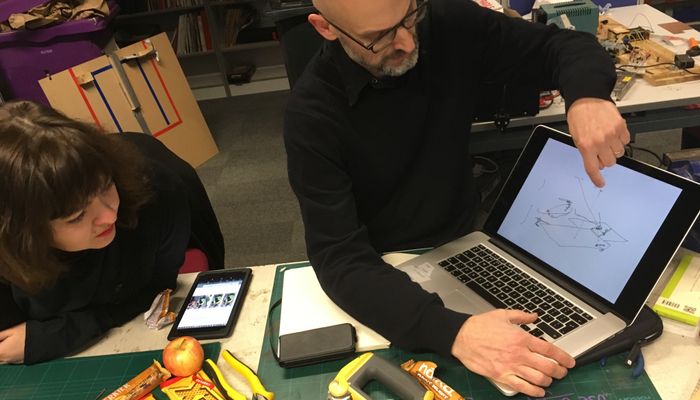

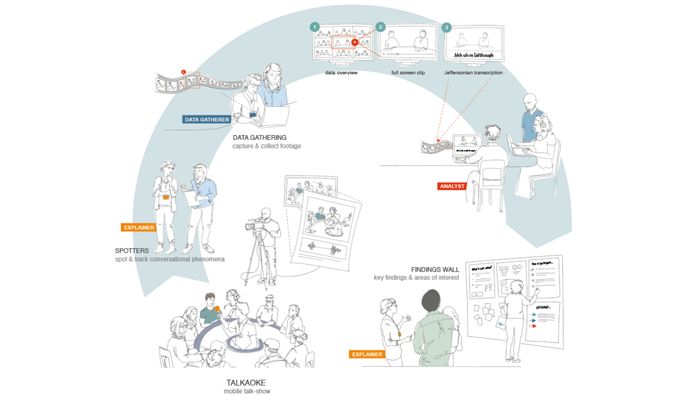

so what might? for years i’ve been thinking about combining scrolling filmstrips with touch interfaces, which promise direct manipulation of video. also, in that documentary the filmstrips are not just user-interface elements for the composer, but displays for the audience. such an interface might get an analyst ‘hands on with time’, and might better serve a watching audience. this is no small point, as the analysis is often done in groups, during ‘data sessions’. anyone present could tap the timeline to take control – rather than one person owning the mouse – and everyone would easily follow the flow of time as the app is used.

of course, maybe this is all post-hoc rationalisation. i’ve been wanting to code up this kind of filmstrip control for years, and now i have.

a little postscript: that panasonic jog-shuttle controller was amazing. the physical control had all the right haptics, but there was also something about the physical constraints of the tape playback system. you never lost track of where you were as the tape came to a stop and started speeding back. time had momentum. so should this.
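a minimal sketch of what ‘time had momentum’ might mean for a filmstrip, written in python. this isn’t the app’s code: `FilmstripScroller`, its `drag`/`flick`/`step` methods and the friction and stop-speed values are all hypothetical, chosen for illustration. a drag moves the playhead directly, so you can work frame by frame; a flick hands it a velocity that decays exponentially, so the strip coasts to a stop rather than halting, and it stops dead at either end of the footage.

```python
import math
from dataclasses import dataclass


@dataclass
class FilmstripScroller:
    """Hypothetical playhead for a touch filmstrip, with momentum.

    A drag moves the playhead directly; a flick gives it a velocity that
    decays exponentially, so the strip coasts to a stop instead of halting.
    """
    frame_count: int
    position: float = 0.0    # playhead, in (fractional) frames
    velocity: float = 0.0    # frames per second; negative runs backwards
    friction: float = 3.0    # decay rate per second (assumed; tune by feel)
    stop_speed: float = 0.5  # below this many frames/s the strip comes to rest

    def drag(self, delta_frames: float) -> None:
        """Finger on the strip: move directly and cancel any momentum."""
        self.velocity = 0.0
        self._move_to(self.position + delta_frames)

    def flick(self, velocity: float) -> None:
        """Finger lifted mid-swipe: keep moving at the release velocity."""
        self.velocity = velocity

    def step(self, dt: float) -> int:
        """Advance by dt seconds of display time; return the frame to show."""
        if self.velocity != 0.0:
            self._move_to(self.position + self.velocity * dt)
            # exponential decay: the same fraction of speed is lost each second
            self.velocity *= math.exp(-self.friction * dt)
            if abs(self.velocity) < self.stop_speed:
                self.velocity = 0.0
        return self.current_frame()

    def current_frame(self) -> int:
        return int(round(self.position))

    def _move_to(self, target: float) -> None:
        last = float(self.frame_count - 1)
        clamped = min(max(target, 0.0), last)
        if clamped != target:
            self.velocity = 0.0  # hitting either end of the footage kills momentum
        self.position = clamped


# usage: flick forwards at 30 frames/s and let the strip coast, stepping at 60 Hz
strip = FilmstripScroller(frame_count=250)
strip.flick(30.0)
while strip.velocity:
    frame = strip.step(1 / 60)
```

with these assumed values, that flick coasts roughly ten frames before coming to rest. exponential decay is a common choice for inertial scrolling, but it is only one option; the point is that the playhead keeps moving after your finger lifts, and slows visibly, so you can see where in time you are landing.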