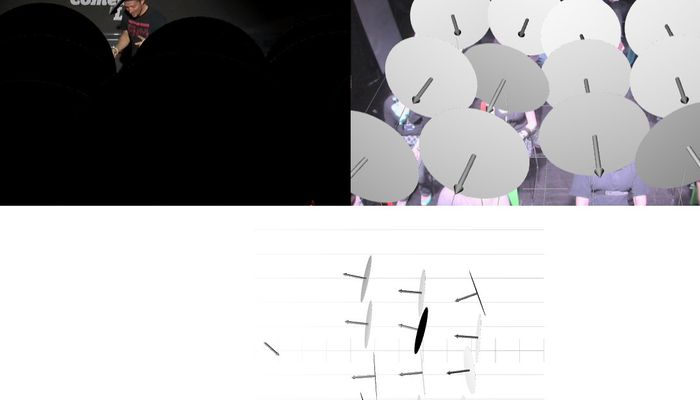

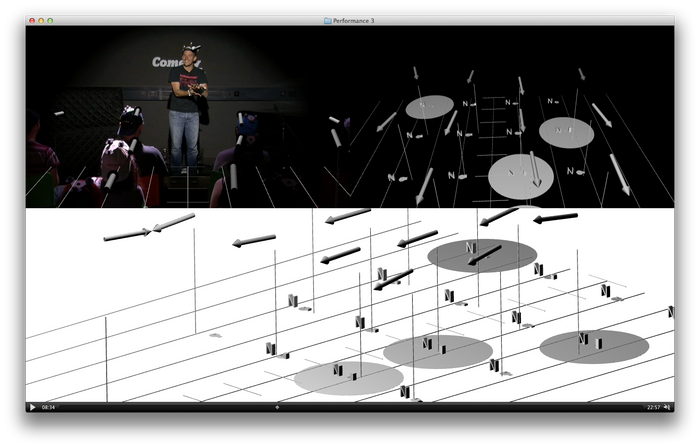

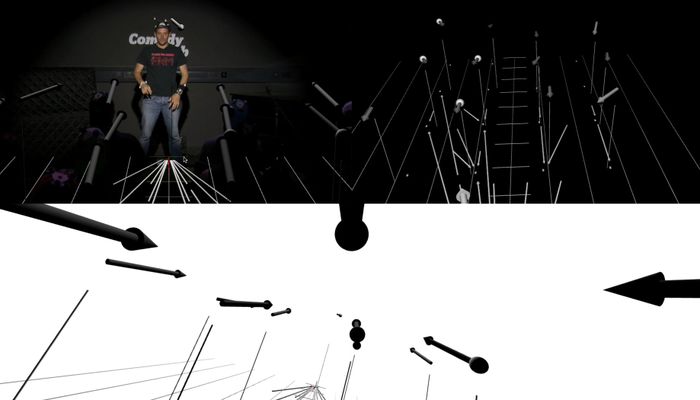

the head pose arrows look like they’re pointing in the right direction… right? well, of course, it’s not that simple.

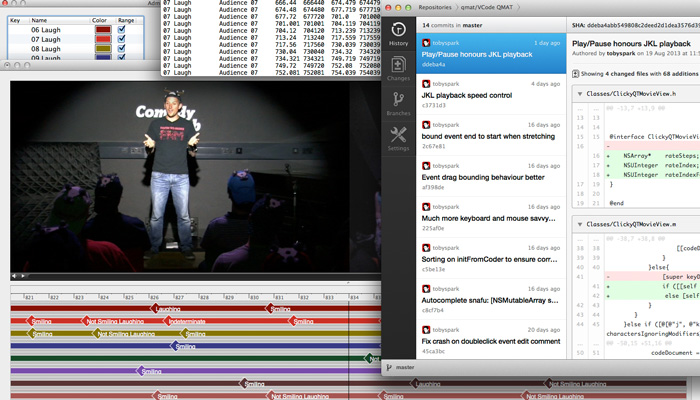

the dataset processing script, vicon exporter, applies an offset to the raw angle-axis pose of the marker fixture to account for the hat not sitting straight on the head. the quick and dirty way to get these offsets is to declare that at a certain time everybody is looking directly forward. that might have been ok if i’d thought to make it part of the experiment procedure, but i didn’t, and even if i had, i’ve got my doubts. but we have a visualiser! it is interactive! it can be hacked to nudge things around!
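the quick and dirty version is only a couple of lines of maths. here's a minimal sketch, not the actual calcOffset code. it assumes the exporter's row-vector form `gaze = [1 0 0] * rm * offset` (as written in its comments) and assumes that at the chosen reference time the subject's head is in its neutral pose facing world +x. the helper names are hypothetical. because a rotation matrix is orthonormal, its inverse is its transpose, so the offset is just `rm'` at the reference frame.

```python
import math

def matmul(a, b):
    """3x3 matrix product a * b (matrices as lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def transpose(m):
    return [list(r) for r in zip(*m)]

def row_times(v, m):
    """Row vector v times 3x3 matrix m, as in MATLAB's [1 0 0] * m."""
    return [sum(v[k] * m[k][j] for k in range(3)) for j in range(3)]

def rot_z(theta):
    """Rotation by theta radians about z."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def offset_from_reference(rm_ref):
    """Offset that makes [1 0 0] * rm_ref * offset == [1 0 0].
    Rotation matrices are orthonormal, so the inverse is the transpose."""
    return transpose(rm_ref)

# Hypothetical reference frame: the hat sits 20 degrees off about z.
rm_ref = rot_z(math.radians(20))
offset = offset_from_reference(rm_ref)
gaze = row_times([1.0, 0.0, 0.0], matmul(rm_ref, offset))
print([round(g, 6) for g in gaze])  # [1.0, 0.0, 0.0]
```

note this treats the whole head pose at that instant as neutral, roll included, not just the gaze direction.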

except that the visualiser just points an arrow along a gaze vector, and a vector on its own doesn’t give you a definitive orientation to nudge around: it says nothing about roll around its own axis. this opened a can of worms where everything that could have stopped it working, did.
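to see why a gaze arrow alone can't be nudged into a definitive orientation, here's a minimal sketch in the exporter's row-vector convention (`gaze = [1 0 0] * R`). pre-multiplying any pose by a roll about x leaves the gaze untouched, because `[1 0 0] * Rx = [1 0 0]`. the helper names and angles are made up for illustration.

```python
import math

def matmul(a, b):
    """3x3 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def row_times(v, m):
    """Row vector v times 3x3 matrix m, as in the exporter's [1 0 0] * rm."""
    return [sum(v[k] * m[k][j] for k in range(3)) for j in range(3)]

def rot_x(theta):
    """Rotation about x, the forward axis here: a pure roll."""
    c, s = math.cos(theta), math.sin(theta)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

forward = [1.0, 0.0, 0.0]
pose = rot_z(math.radians(30))                  # some head pose
rolled = matmul(rot_x(math.radians(45)), pose)  # same pose with a 45 degree roll folded in

gaze_a = row_times(forward, pose)
gaze_b = row_times(forward, rolled)

same_gaze = all(math.isclose(p, q, abs_tol=1e-12) for p, q in zip(gaze_a, gaze_b))
same_pose = all(math.isclose(p, q, abs_tol=1e-12)
                for row_p, row_q in zip(pose, rolled) for p, q in zip(row_p, row_q))
print(same_gaze, same_pose)  # True False
```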

“The interpretation of a rotation matrix can be subject to many ambiguities.”

http://en.wikipedia.org/wiki/Rotation_matrix#Ambiguities
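two of those ambiguities are exactly the ones in play here: whether a matrix multiplies column vectors (`R v`) or row vectors (`v R`, as in the exporter's `[1 0 0] * rm`), and whether it rotates a point or the coordinate axes. a minimal sketch with hypothetical helper names shows the same 90° matrix sending x towards +y in one reading and towards -y in the other. it also shows that the row form, the column form with the transpose, and the "rotate the axes, keep the point" reading all give the same answer.

```python
import math

def rot_z(theta):
    """Rotation by theta about z, written for column vectors (v' = R v)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def transpose(m):
    return [list(r) for r in zip(*m)]

def apply_column(m, v):
    """Active rotation of a point, column-vector convention: v' = M v."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

def apply_row(v, m):
    """Row-vector convention, as in the exporter's [1 0 0] * rm: v' = v M."""
    return [sum(v[k] * m[k][j] for k in range(3)) for j in range(3)]

def coords_in_rotated_axes(m, v):
    """Passive reading: coordinates of a fixed point v in axes rotated by M.
    The rotated axes are the columns of M; each coordinate is a dot product."""
    axes = [[m[k][i] for k in range(3)] for i in range(3)]
    return [sum(a * b for a, b in zip(axis, v)) for axis in axes]

R = rot_z(math.radians(90))
x = [1.0, 0.0, 0.0]

col = [round(a, 9) for a in apply_column(R, x)]
row = [round(a, 9) for a in apply_row(x, R)]
print(col)  # [0.0, 1.0, 0.0]
print(row)  # [0.0, -1.0, 0.0]

# Row form == column form with the transpose == passive rotation of the axes.
print(apply_row(x, R) == apply_column(transpose(R), x) == coords_in_rotated_axes(R, x))  # True
```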

hard-won code –

DATASET VISUALISER

```objc
// Now write MATLAB code to the console which will generate correct offsets from this viewer's modelling with SceneKit
for (NSUInteger i = 0; i < [self.subjectNodes count]; ++i)
{
    // Vicon Exporter calculates the gaze vector as
    //   gaze = [1 0 0] * rm * subjectOffsets{subjectIndex};
    // rm = rotation matrix from World to Mocap = Rwm
    // subjectOffsets = rotation matrix from Mocap to Offset (ie. Gaze) = Rmo

    // In this viewer, we model a hierarchy of
    //   Origin Node -> Audience Node -> Mocap Node -> Offset Node, rendered as axes.
    // The Mocap node is rotated with Rmw (ie. rm') to comply with reality.
    // Aha. This is because in this viewer we are rotating the coordinate space, not a point as per the exporter.

    // By manually rotating the offset node so its axes register with the head pose in the video,
    // we should be able to export a rotation matrix.
    // We need to get Rmo as a rotation of a point.
    // Rmo as rotation of a point = Rom as rotation of the coordinate space

    // In this viewer, we have
    // Note i. these are rotations of the coordinate space
    // Note ii. we're doing this by taking the 3x3 rotation matrix out of the 4x4 transform matrix
    //   [mocapNode worldTransform]  = Rwm
    //   [offsetNode transform]      = Rmo
    //   [offsetNode worldTransform] = Rwo
    // We want Rom as rotation of the coordinate space
    // Therefore Offset = Rom = Rmo' = [offsetNode transform]'
    // CATransform3D is, however, transposed relative to a rotation matrix in MATLAB.
    // Therefore Offset = [offsetNode transform]

    SCNNode* node = self.subjectNodes[i][@"node"];
    SCNNode* mocapNode = [node childNodeWithName:@"mocap" recursively:YES];
    SCNNode* offsetNode = [mocapNode childNodeWithName:@"axes" recursively:YES];

    // mocapNode has a rotation animation applied to it. Use the presentation node to get the rendered position.
    // (Rom below only needs offsetNode's local transform, so this isn't strictly required for it.)
    mocapNode = [mocapNode presentationNode];

    CATransform3D Rom = [offsetNode transform];

    printf("offsets{%lu} = [%f, %f, %f; %f, %f, %f; %f, %f, %f];\n",
           (unsigned long)i + 1,
           Rom.m11, Rom.m12, Rom.m13,
           Rom.m21, Rom.m22, Rom.m23,
           Rom.m31, Rom.m32, Rom.m33);

    // BUT! For this to actually work, Vicon Exporter must compute
    //   [1 0 0] * subjectOffsets{subjectIndex} * rm;
    // note the matrix multiplication order.
    // Isn't 3D maths fun.
    // "The interpretation of a rotation matrix can be subject to many ambiguities."
    // http://en.wikipedia.org/wiki/Rotation_matrix#Ambiguities
}
```
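the last comment in that loop, about multiplication order, is easy to check numerically. this is a minimal sketch with made-up angles: `rm` and `offset` are stand-ins, not values from the dataset, and the helpers are hypothetical. it uses the same row-vector form as the exporter. swapping `rm * offset` for `offset * rm` changes the gaze unless the two rotations commute.

```python
import math

def matmul(a, b):
    """3x3 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def row_times(v, m):
    """Row vector v times 3x3 matrix m, as in MATLAB's [1 0 0] * m."""
    return [sum(v[k] * m[k][j] for k in range(3)) for j in range(3)]

def rot_y(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

forward = [1.0, 0.0, 0.0]
rm = rot_z(math.radians(40))      # stand-in for one frame's mocap rotation
offset = rot_y(math.radians(15))  # stand-in for a hand-nudged offset

gaze_rm_first = row_times(forward, matmul(rm, offset))   # [1 0 0] * rm * offset
gaze_off_first = row_times(forward, matmul(offset, rm))  # [1 0 0] * offset * rm
print([round(g, 3) for g in gaze_rm_first])   # [0.74, -0.643, 0.198]
print([round(g, 3) for g in gaze_off_first])  # [0.74, -0.621, 0.259]

# Rotations about the same axis commute, so a test that only yaws the head
# (and only nudges the offset in yaw) can't tell the two orders apart.
a, b = rot_z(math.radians(40)), rot_z(math.radians(15))
same_axis_ab = row_times(forward, matmul(a, b))
same_axis_ba = row_times(forward, matmul(b, a))
```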

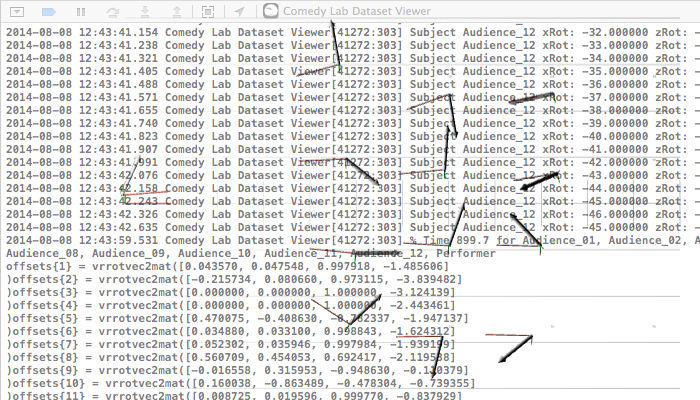

VICON EXPORTER

```matlab
poseData = [];

for frame = 1:stopAt
    poseline = [frameToTime(frame, dataStartTime, dataSampleRate)];
    frameData = reshape(data(frame,:), entriesPerSubject, []);

    for subjectIndex = 1:subjectCount
        %% POSITION
        position = frameData(4:6,subjectIndex)';

        %% ORIENTATION
        % Vicon V-File uses axis-angle represented as three values: the axis is the xyz vector and the angle is the magnitude of that vector
        % [x y z, |xyz| ]
        ax = frameData(1:3,:);
        ax = [ax; sqrt(sum(ax'.^2,2))'];
        rotation = ax(:,subjectIndex)';

        %% ORIENTATION CORRECTED FOR OFF-AXIS ORIENTATION OF MARKER STRUCTURE
        rm = vrrotvec2mat(rotation);

        %% if generating offsets via calcOffset then use this
        % rotation = vrrotmat2vec(rm * offsets{subjectIndex});
        % gazeDirection = subjectForwards{subjectIndex} * rm * offsets{subjectIndex};

        %% if generating offsets via Comedy Lab Dataset Viewer then use this
        % rotation = vrrotmat2vec(offsets{subjectIndex} * rm); % actually, don't do this as it creates some axis-angle with imaginary components.
        gazeDirection = [1 0 0] * offsets{subjectIndex} * rm;

        poseline = [poseline position rotation gazeDirection];
    end

    poseData = [poseData; poseline];
end
```
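the per-frame decoding above can be mirrored outside MATLAB, which is handy for sanity-checking the maths. this is a minimal sketch with hypothetical function names. it slices a frame the way `reshape(data(frame,:), entriesPerSubject, [])` does, then turns the three-value rotation vector into an axis and an angle (assuming the angle is in radians). from that it builds a rotation matrix with Rodrigues' formula, in its textbook column-vector form; this is not a claim about what `vrrotvec2mat` does internally. finally it computes gaze in the exporter's current order, `[1 0 0] * offsets{subjectIndex} * rm`. the frame values and the 6 entries per subject are made up.

```python
import math

def decode_subject(frame_row, entries_per_subject, subject):
    """Slice one subject out of a frame row, mirroring
    reshape(data(frame,:), entriesPerSubject, []) with a 0-based subject index:
    entries 0-2 are the rotation vector, entries 3-5 the position."""
    base = subject * entries_per_subject
    block = frame_row[base:base + entries_per_subject]
    return list(block[0:3]), list(block[3:6])

def axis_angle_from_rotation_vector(rv):
    """Rotation vector -> (unit axis, angle): the direction is the axis and the
    magnitude is the angle, i.e. the [x y z |xyz|] step in the exporter."""
    angle = math.sqrt(sum(c * c for c in rv))
    if angle == 0.0:
        return [1.0, 0.0, 0.0], 0.0  # no rotation, so any axis will do
    return [c / angle for c in rv], angle

def rotation_matrix(axis, angle):
    """Rodrigues' formula R = cI + sK + (1 - c)kk^T, written for column vectors (v' = R v)."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    t = 1.0 - c
    return [
        [c + x * x * t,     x * y * t - z * s, x * z * t + y * s],
        [y * x * t + z * s, c + y * y * t,     y * z * t - x * s],
        [z * x * t - y * s, z * y * t + x * s, c + z * z * t],
    ]

def matmul(a, b):
    """3x3 matrix product a * b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def gaze_direction(rm, offset):
    """Row-vector gaze in the exporter's current order: [1 0 0] * offset * rm."""
    m = matmul(offset, rm)
    forward = [1.0, 0.0, 0.0]
    return [sum(forward[k] * m[k][j] for k in range(3)) for j in range(3)]

# Hypothetical frame with two subjects and 6 entries each:
# subject 1 rotated 90 degrees about z, subject 2 not rotated.
frame_row = [0.0, 0.0, math.pi / 2, 100.0, 200.0, 1500.0,
             0.0, 0.0, 0.0, 300.0, 400.0, 1500.0]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

rv, position = decode_subject(frame_row, 6, 0)
axis, angle = axis_angle_from_rotation_vector(rv)
rm = rotation_matrix(axis, angle)
gaze = gaze_direction(rm, identity)
print(position, axis, round(angle, 6))
print([round(g, 6) for g in gaze])
```

with the made-up subject rotated 90 degrees about z, the column form of `rm` would send x to +y, while the row-vector gaze comes out along -y: the same matrix, read two ways.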