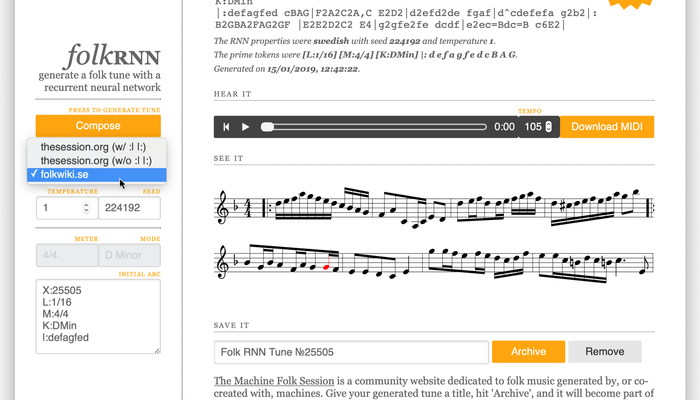

folkrnn.org can now generate tunes in a swedish folk idiom. bob, having moved to KTH in sweden, got some new students to create a folkrnn model trained on a corpus of swedish folk tunes. and herein lies a tale of how things are never as simple as they seem.

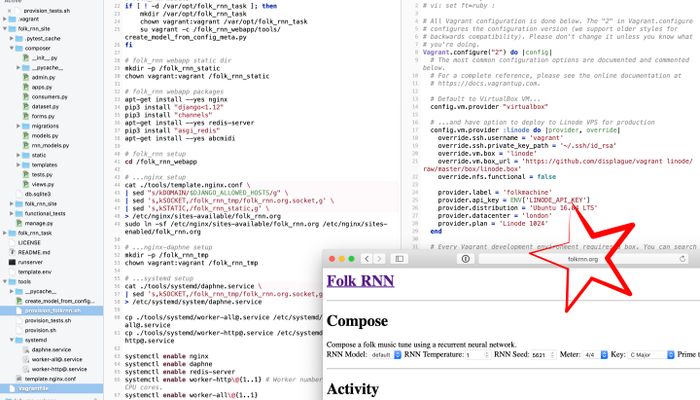

the tale goes something like this: here we have a model that already works with the command-line version of folkrnn. and the webapp folkrnn.org parses models and automatically configures itself. a simple drop-in, right?

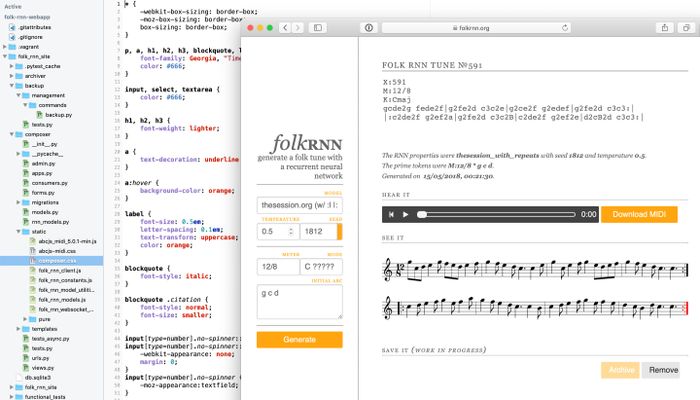

first of all, this model is sufficiently different that the defaults for the meter, key and tempo are no longer appropriate. so a per-model defaults system was needed.

then, the meter and key composition parameters are formatted differently in this corpus, which pretty much broke everything. piecemeal hacks weren’t cutting it, so a sane system was needed: one that standardised on a single format and bridged cleanly to the raw tokens of each model.
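
as a rough illustration of that bridging idea, here is a minimal sketch. it assumes the canonical format is a plain ABC-style header such as `M:4/4`, and that a model's vocabulary might spell the same thing with or without square brackets. both spellings and both function names are hypothetical, not taken from the folkrnn code.

```python
def to_model_token(canonical, vocabulary):
    """map a canonical header like 'M:4/4' onto the spelling this model's vocabulary uses."""
    # assumed spellings: bare ('M:4/4') or bracketed ('[M:4/4]')
    for candidate in (canonical, f"[{canonical}]"):
        if candidate in vocabulary:
            return candidate
    raise ValueError(f"{canonical!r} has no matching token in this model")


def to_canonical(token):
    """map a raw model token back to the canonical spelling by dropping any brackets."""
    return token.strip("[]")
```

the rest of the webapp then only ever sees the canonical form, and each model's quirks stay at the edge.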

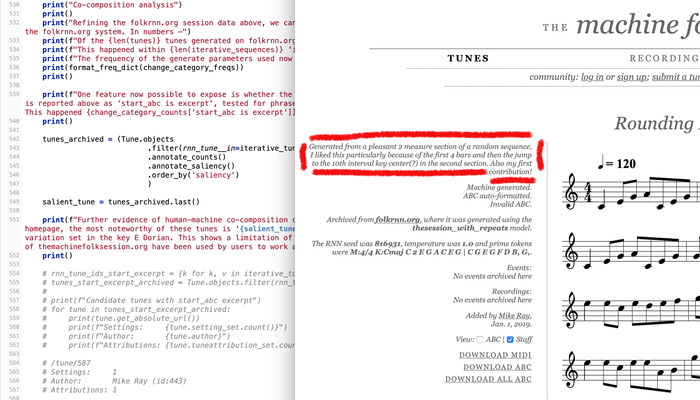

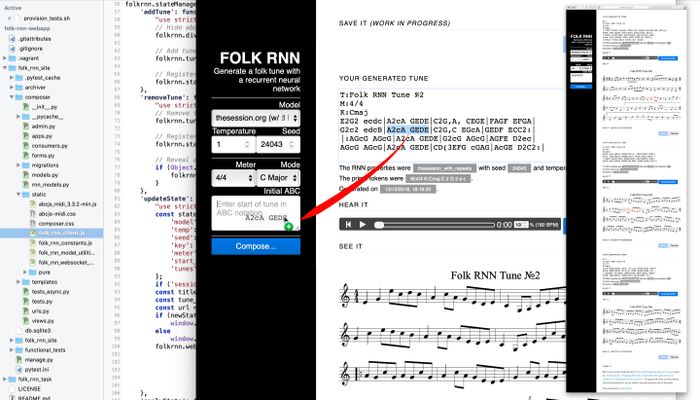

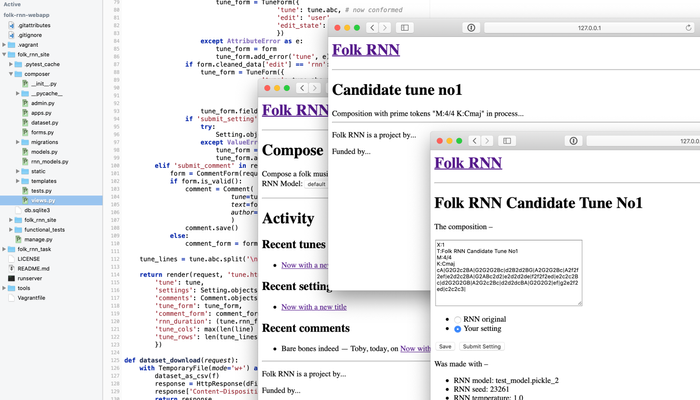

after the satisfaction of seeing it working, bob noticed that the generated tunes were of poor quality. when a user of folkrnn.org generates a tune with a previous model, setting the first two tokens to the meter and key is exactly what the model expects, and it can then fill in the rest, drawing from the countless examples of that combination found in the corpus. but with this new model, or rather the corpus it was trained on, a new parameter precedes these two. so the mechanics that kick off each tune now needed to cope with an extra, optional term.

so expose this value in the composition panel? that seems undesirable, as this parameter is effectively a technical option subsumed by the musical choice of meter. and manually choosing it doesn’t guarantee you’re choosing a combination found in the corpus, so the generated tunes are still mostly of poor quality.

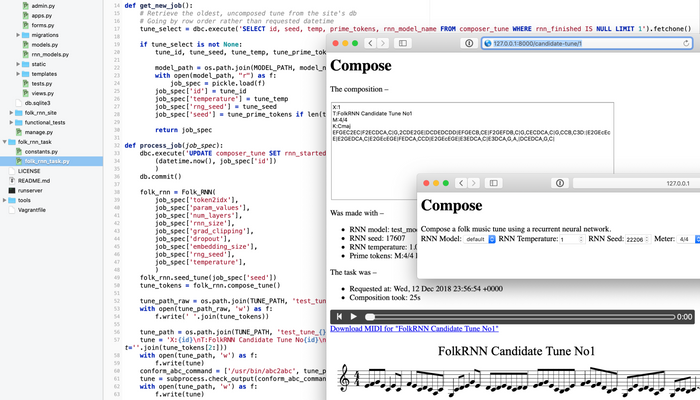

at this point, one might think that choosing appropriate values is exactly what RNNs do. but it’s not that simple, as the RNN encounters this preceding value, the unit-note-length, first, before the meter value set by the user. it can choose an appropriate meter from the unit-note-length, but not the other way round. so a thousand runs of the RNN and a resulting frequency table later, folkrnn.org is now wired to generate an appropriate pairing, akin to the RNN running backwards. those thousand runs also showed that only a subset of the meters and keys found in the corpus are used to start the tune, so now the compose panel only shows those, which makes for a much less daunting drop-down, and fewer misses for generated tune quality.
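
the frequency-table trick can be sketched roughly as below. this is an illustration under assumptions, not the folkrnn.org code: it supposes each run has been reduced to a `(unit_note_length, meter)` pair of header strings, and the function names are made up for the example.

```python
import random
from collections import Counter, defaultdict


def build_pairing_table(runs):
    """count how often each unit-note-length was followed by each meter across the runs."""
    table = defaultdict(Counter)
    for unit_note_length, meter in runs:
        table[meter][unit_note_length] += 1
    return table


def choose_unit_note_length(table, meter, rng=random):
    """pick a unit-note-length for the user's meter, weighted by how often the pair was seen."""
    counts = table.get(meter)
    if not counts:
        raise KeyError(f"meter {meter!r} never started a tune in the sampled runs")
    lengths = list(counts)
    weights = list(counts.values())
    return rng.choices(lengths, weights=weights, k=1)[0]
```

the keys of the table double as the list of meters worth offering in the drop-down, since anything absent never started a tune in the sampled runs.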

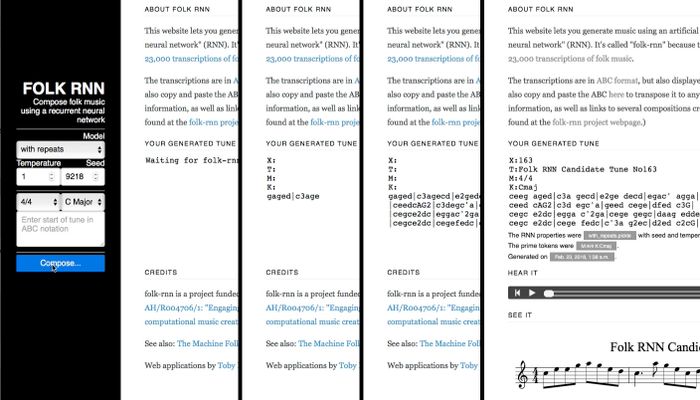

unit-note-length is now effectively a hidden variable, which does the right thing… providing you don’t want to iteratively refine a composition, as it may vary from one generated tune to the next. rather than exposing the parameter after all, and then having to implement pinning as per the seed parameter’s control, a better idea was had: make the initial ABC field also handle this header part of the tune. so rather than just copying-and-pasting-in snippets of a tune, you could paste in the tune from the start, including this unit-note-length header. this is neat because, as well as providing the advanced feature of being able to specify the unit-note-length value, it makes the UI work better for naïve users: why couldn’t you copy and paste in a whole tune before?
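
to give a feel for what handling the header involves, here is a minimal sketch that splits pasted ABC into leading header lines and the tune body. it assumes headers are single-uppercase-letter fields such as `L:`, `M:` and `K:`, one per line, at the top of the text. the function name is hypothetical, and the real thing also has to verify the values against what the model knows.

```python
import re

# a header line is one uppercase letter, a colon, then a value, e.g. 'L:1/8'
HEADER_LINE = re.compile(r"^([A-Z]):\s*(.+?)\s*$")


def split_abc(text):
    """return (headers, body): leading header fields as a dict, then the remaining tune text."""
    headers = {}
    lines = text.strip().splitlines()
    body_start = 0
    for index, line in enumerate(lines):
        match = HEADER_LINE.match(line)
        if not match:
            break  # first non-header line: the tune body starts here
        headers[match.group(1)] = match.group(2)
        body_start = index + 1
    return headers, "\n".join(lines[body_start:])
```

a pasted snippet with no header lines comes back with an empty dict, so the old copy-and-paste behaviour still works unchanged.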

as per the theme here, implementing this wasn’t just a neat few lines of back-end python, as the interface code that is loaded into the browser now needs to be able to parse out and verify these header lines, and so on.