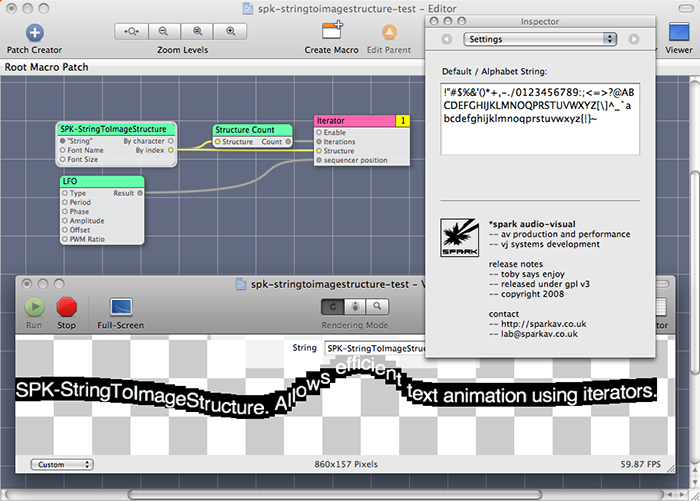

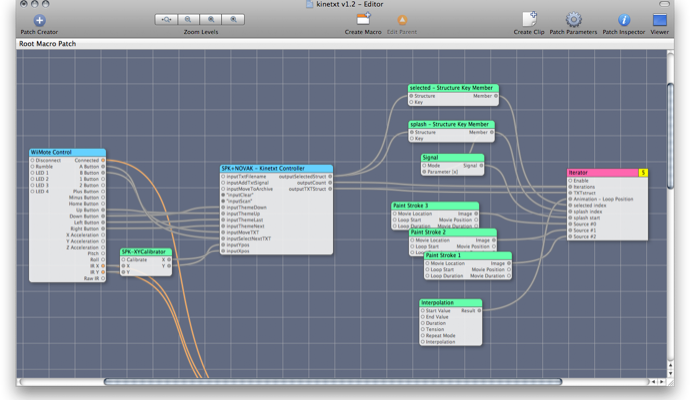

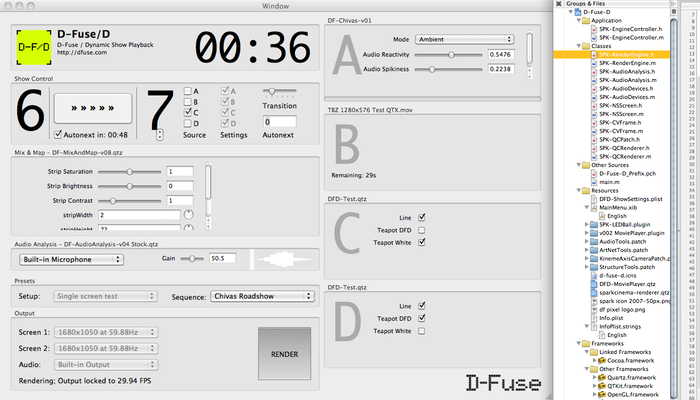

there is now a mac pro in china running DFD, something that has been consuming my time for a while now. the roadshow d-fuse have been developing is our first big foray into automated dynamic content, lighting and audience interaction. we can't be at every gig holding it down with a hacked-up vj setup, so we needed something you could just power on and the show would start. and so d-fuse/dynamic was hatched: a quicktime and quartz composer sequencer that reads its presets and input/output configuration from a folder we can update remotely, and essentially just presents a “next” button to the on-site crew.
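to make the “folder of presets plus a next button” idea concrete, here's a minimal sketch. it is not DFD's code. the JSON preset files, playing them in filename order, and wrapping back to the first preset after the last are all my assumptions for illustration, and `PresetSequencer` is a hypothetical name.

```python
import json
from pathlib import Path


class PresetSequencer:
    """Hypothetical 'next'-button sequencer over a remotely updated folder.

    Assumes each preset is a *.json file and that presets play in
    filename order (e.g. 01_intro.json, 02_main.json, ...).
    """

    def __init__(self, preset_dir):
        self.preset_dir = Path(preset_dir)
        self.presets = []
        self.index = -1
        self.reload()

    def reload(self):
        # Re-read the folder so remote updates are picked up at power-on.
        self.presets = [
            json.loads(path.read_text())
            for path in sorted(self.preset_dir.glob("*.json"))
        ]
        self.index = -1

    def next(self):
        # The one control the crew needs: advance to the next preset.
        # Assumption: wrap around to the first preset after the last.
        if not self.presets:
            return None
        self.index = (self.index + 1) % len(self.presets)
        return self.presets[self.index]
```

keeping everything in the folder means the crew never touches configuration; a remote update plus a restart is the whole deployment story.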

what i think is particularly novel about DFD is that it was designed to output a consistent frame rate, rendering slightly ahead of time so the fluctuations in QC and QT frame rendering times are buffered out. i’m not sure the reward was worth the effort, but it will be an interesting code base to come back to and re-evaluate.
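a toy model shows why rendering ahead smooths things out, and what it costs. this is a simulation, not how DFD is actually implemented: the renderer works up to `depth` frames ahead of a fixed-rate output clock, output starts after `depth` frame periods of pre-roll, and a buffer slot frees up only once its frame has been shown. `count_late_frames` and its parameters are hypothetical names.

```python
def count_late_frames(render_times, period, depth):
    """Count output ticks whose frame was not ready in time.

    Hypothetical model of render-ahead buffering: frame i is due at
    (depth + i) * period, and the renderer may not start frame j until
    frame j - depth has left the buffer (been shown).
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    finish = []  # time each frame finished rendering
    shown = []   # time each frame left the buffer
    late = 0
    for j, cost in enumerate(render_times):
        start = finish[j - 1] if j > 0 else 0.0
        if j >= depth:
            # Wait for a free slot in the buffer.
            start = max(start, shown[j - depth])
        done = start + cost
        due = (depth + j) * period
        finish.append(done)
        if done > due:
            late += 1
            shown.append(done)  # a late frame goes out as soon as it is ready
        else:
            shown.append(due)
    return late
```

with one slow frame (1.6 periods) in an otherwise fast run, `count_late_frames([0.5, 0.5, 1.6, 0.5, 0.5, 0.5], 1.0, 1)` reports two late frames, while a depth of 3 absorbs the spike completely. the buffer only hides jitter, though: if rendering is consistently slower than the output rate, frames go late at any depth. in this model the price is `depth` periods of extra latency, which matters for audio-reactive content and is part of the effort/reward question.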

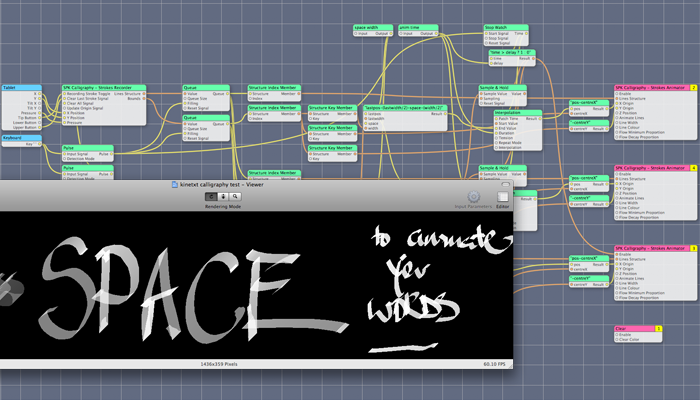

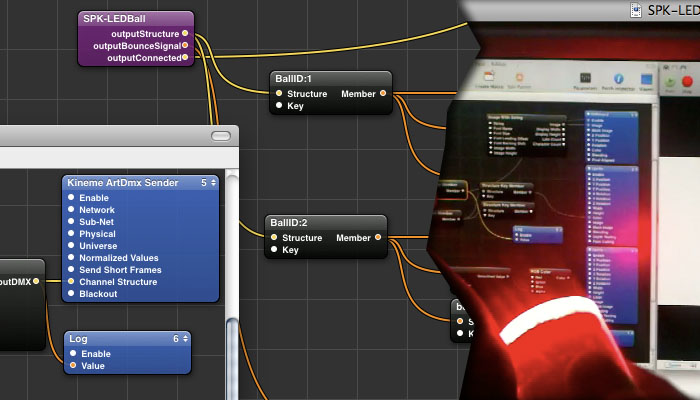

for the roadshow, it plays out any combination of four sources at 1280x576, including the generative, audio-reactive core of the show (controlled by iPads in the audience and LED balls on stage). it sends the central 1024x576 to the main screen, drives ten 72x1px LED strips from the remaining 128x576px on either side, and sends DMX back out to the stage lighting and LED balls.
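the canvas split is simple arithmetic: 1280 - 1024 leaves 256px, so 128px on each side of a centred screen area. the sketch below computes the crop rectangles as (x, y, w, h). how the ten strips are laid out over the side bands isn't described here, so `strip_sample_rows` is purely hypothetical: it assumes a strip runs vertically down a 576px band and takes nearest-neighbour samples from the middle of each 8-row block.

```python
def split_canvas(width=1280, height=576, screen_width=1024):
    """Return (x, y, w, h) crops for the main screen and both LED bands.

    Assumes the screen area is centred horizontally on the canvas.
    """
    side = (width - screen_width) // 2
    return {
        "left_leds": (0, 0, side, height),
        "screen": (side, 0, screen_width, height),
        "right_leds": (side + screen_width, 0, side, height),
    }


def strip_sample_rows(height=576, pixels=72):
    """Hypothetical: row indices for a vertical strip of `pixels` LEDs,
    sampling the centre of each equal block of rows."""
    step = height / pixels
    return [int((i + 0.5) * step) for i in range(pixels)]
```

with the defaults, the screen crop is (128, 0, 1024, 576) and the right band starts at x = 1152.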

big thanks to vade, luma beamerz, and memo for helping me, one way or another, grok anti-aliased framebuffer rendering.

having spent much time i didn’t have trying to get 64-bit QTX to give me OpenGL frames as efficiently as QuickTime 7, life-saving thanks also to vade and tom for v002 movie player 2.0. there is a patch back with them that gives it the ability to play the QT audio to a specific output.

lastly, a perennial thanks to kineme: i couldn’t have gone in this direction without knowing their DMX, Axis Camera, and audio patches were out there.

i’m not sure what to do with the code at the moment. it was made as a generic platform, but in its current state it is still very much tied to that specific project; or rather, the inevitable last-minute hacking done as it hit china needs to be straightened out. it was made and funded as a tool for d-fuse to build on, so that needs to be taken into account too. in short, if anybody has a concrete need for such a thing, get in touch and we’ll see what could be done.