A gaming workshop for Studio Digital. Ten hours, ten teenagers. Go!

I developed and led a workshop on digital creativity. The brief was gaming. I wanted some kind of physical computing spectacle to expand everyone’s idea of what ‘Studio Digital’ could mean.

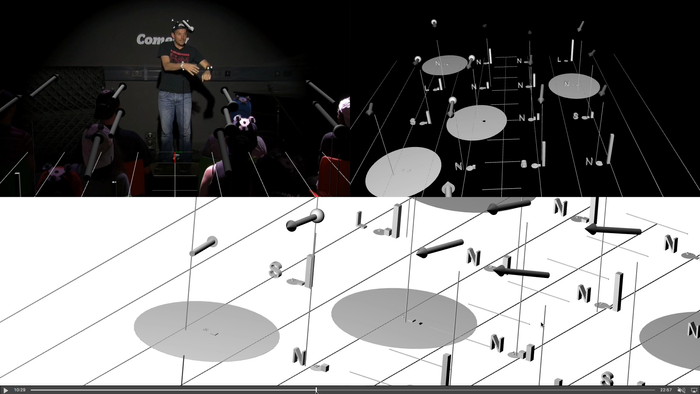

And so: Cardboard Wipeout, an immersive racing game that riffs off the seminal (to me) futuristic video game wipE’out”. We bought two super-cheap radio-control cars, asked the town for all their post-Christmas cardboard, and over two sessions improvised our way to what you can see in the video.

In doing so, the participants:

- Adapted a video game to a new medium

- Created an immersive, interactive experience

- Worked through marking-out and fitting-up a three-dimensional figure-of-eight track out of flat cardboard sheets

- Made vinyl graphics to brand the track and space

- Learnt how a micro:bit controller can animate special LED strips

- Learnt how micro:bit controllers can talk to each other and ‘run’ a game

- Experimented with whether first-person video could work for driving the cars

- An’ stuff…

Most of all, I think the workshop provided two insights:

- The world is malleable. If all you’ve ever known is passive consumption of media, games, etc., it’s quite a leap to realise you don’t have to accept things as they are: you can break “warranty void if broken” seals and make your own culture.

- You can make something that looks amazing… even if it’s mega-scrappy when you turn the lights back on. So: you got there, you’re as good as anyone else actually is, now go iterate and make it truly amazing.

Thanks to Jon, Sophie, Naomi and everyone at Contains Art and their Studio Digital programme.

Implementation

The game needs

- A micro:bit controller to run the game logic, using the A button to start the countdown and the B button to manually record a race finish. This micro:bit can also be used as the finish-line sensor and a/v controller (see below).

- A micro:bit controller per strip of individually-addressable LEDs (WS2812B, aka NeoPixel). I used 2x 5m, 150 LED strips.

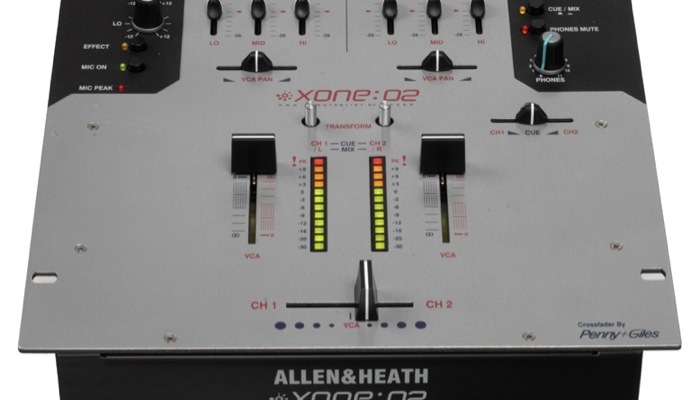

- A radio-control car and track.

- Cardboard saws. Yes, they’re a thing: MakeDo, Canary.
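The game-logic controller in the list above boils down to a small state machine. Here is a minimal sketch of one way to structure it, written in plain Python so it runs off-device. It is not the workshop code: the `RaceGame` class, the state names, the three-second countdown and the use of A to reset after a finish are all assumptions made for illustration.

```python
WAITING, COUNTDOWN, RACING, FINISHED = "waiting", "countdown", "racing", "finished"


class RaceGame:
    """Hypothetical game logic: A starts the countdown, B records a finish."""

    def __init__(self, countdown_ms=3000):
        self.countdown_ms = countdown_ms  # assumed countdown length
        self.state = WAITING
        self.state_since = 0              # time (ms) the current state began
        self.race_time_ms = None

    def _enter(self, state, now_ms):
        self.state = state
        self.state_since = now_ms

    def press_a(self, now_ms):
        # A starts the countdown; after a finish it returns to waiting (assumption)
        if self.state == WAITING:
            self._enter(COUNTDOWN, now_ms)
        elif self.state == FINISHED:
            self._enter(WAITING, now_ms)

    def press_b(self, now_ms):
        # B manually records the finish, and only counts while racing
        if self.state == RACING:
            self.race_time_ms = now_ms - self.state_since
            self._enter(FINISHED, now_ms)

    def tick(self, now_ms):
        # Switch from countdown to racing once the countdown has elapsed;
        # the race clock starts at the moment the countdown ended
        if self.state == COUNTDOWN and now_ms - self.state_since >= self.countdown_ms:
            self._enter(RACING, self.state_since + self.countdown_ms)
        return self.state
```

On a micro:bit, the main loop would call `press_a()` and `press_b()` when `button_a.was_pressed()` and `button_b.was_pressed()` return true, and pass `running_time()` as `now_ms`. Keeping the hardware out of the class means the logic can be tested on a laptop before anything is flashed.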

Bonuses

- A micro:bit controller to message audio-visual kit capable of running music, countdowns, lap timers etc., e.g. a PC listening to a serial port over the micro:bit’s USB connection. In the repo linked below, there is a Mac OS X Quartz Composer patch that will do this.

- A micro:bit in each car can run the game-logic, and opens up much game-play and a/v potential. See this diary post.

- A micro:bit with a sensor to detect cars passing the finish line. I had a break-beam sensor for this, but it didn’t seem too reliable, and then a wire broke during the workshop.

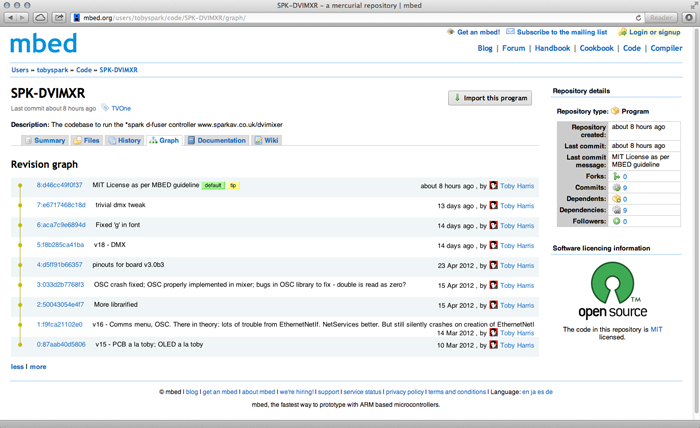
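For the a/v bonus, the micro:bit and the PC need to agree on some message format. A minimal sketch of the PC side follows, assuming a hypothetical one-line-per-event format such as `countdown:3` or `finish:12345`. Both the format and the function names are illustrative, and are not what the Quartz Composer patch expects. On the micro:bit side, MicroPython's `print()` writes to the USB serial port, so sending an event can be as simple as `print("finish:12345")`.

```python
def parse_event(line):
    """Split one serial line like 'finish:12345' into (name, value).

    The 'name:value' format is an assumption made for this sketch.
    Blank or malformed lines return None, so noise on the port is
    ignored rather than crashing the listener.
    """
    line = line.strip()
    if not line:
        return None
    name, sep, value = line.partition(":")
    if not name:
        return None
    if not sep:
        return (name, None)  # bare event with no value, e.g. 'start'
    try:
        return (name, int(value))
    except ValueError:
        return None


def format_lap_time(ms):
    """Render milliseconds as m:ss.mmm for an on-screen lap timer."""
    minutes, rest = divmod(ms, 60000)
    seconds, millis = divmod(rest, 1000)
    return "%d:%02d.%03d" % (minutes, seconds, millis)
```

The listener would read lines from the serial port with whatever library is to hand, pass each one through `parse_event`, and trigger music, countdown graphics or a lap time display from the result.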

Code

Notes

This is the first time I’ve used micro:bit controller boards. They’re great. Really practical feature set – that 5x5 LED grid and peer-to-peer radio in particular – backed by a wealth of teaching materials and stand-alone projects. Drag-and-drop blocks in a webpage for those new to programming, and Python and the Mu editor for those needing something more. And they’re cheap.

Two things caught me out. There is no breakout board for the micro:bit that interfaces 3.3V and 5V. NeoPixel-like LED strips, for instance, need 5V, so a NeoPixel controller micro:bit needs both a power regulator in (5V down to the micro:bit’s 3.3V) and a line driver back out (3.3V data up to 5V). I hacked these onto a bread:bit breakout board, but to be robust and repeatable this really should be built into a PCB like that. (The controller board I made for Tekton is that on steroids, for the Raspberry Pi; it’s thanks to making those that I had the line driver chips lying around.)

The other thing is that sometimes the race would finish straight after starting, or, after the finish state, pass straight through waiting-for-player into countdown. The problem is that the radio module keeps a queue of incoming messages, so the game could read stale messages, or miss new ones that were dropped because the queue was full. So I wrote an only-care-about-the-latest wrapper class: radiolatest.py on GitHub
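The idea can be sketched in a few lines. This is not the contents of `radiolatest.py`, just a minimal illustration of draining the queue and keeping the newest message. The `LatestOnly` name is hypothetical, and the receive function is passed in so the sketch runs off-device; on a micro:bit you would pass `radio.receive`, which returns `None` when nothing is pending.

```python
from collections import deque


class LatestOnly:
    """Wrap a receive() function so callers only ever see the newest message."""

    def __init__(self, receive):
        # receive: callable returning the next queued message, or None when empty
        self._receive = receive

    def receive(self):
        latest = None
        while True:
            message = self._receive()
            if message is None:
                return latest  # queue drained; still None if nothing arrived
            latest = message   # anything older is discarded


# Off-device demo: a deque stands in for the radio's incoming queue.
pending = deque(["finish", "waiting", "countdown"])
latest = LatestOnly(lambda: pending.popleft() if pending else None)
newest = latest.receive()  # "countdown"; the two stale messages are thrown away
```

Draining on every read means a slow main loop acts on the current state of the game rather than replaying a backlog. The trade-off is that intermediate messages are lost on purpose, so this only suits messages that describe state, not events that must each be counted.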