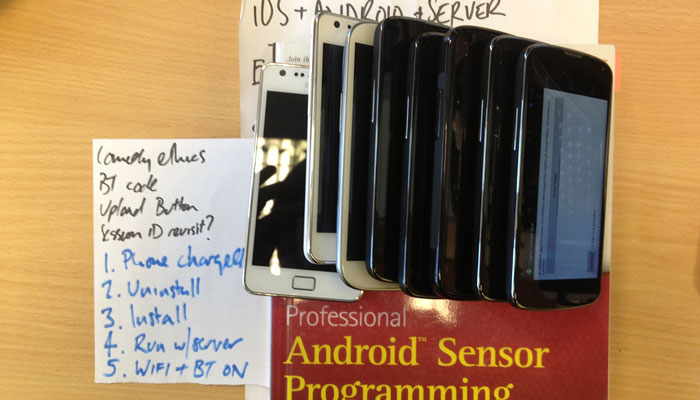

the interactive-map-and-then-some app turned out to be a step too far for the organisation we hoped would make it their own, but there was still a festival, and a need to determine just what smartphone sensors could tell you about the activity around it. and so another app was born, one to harvest any and all sensor data for real-time or subsequent analysis. with the interaction, media and communication group i’m part of now being rebranded cognitive science, here is the cogsci crowd app, as it stands.

https://github.com/qmat/IMC-Crowd-App-Android

https://github.com/qmat/IMC-Crowd-Server

the UI presents a ‘crowd node’ toggle button, which corresponds to the app running a data logger and making a connection to a server counterpart. it’s called ‘crowd node’ because we hope this to be the beginning of a network of devices worn amongst the crowd, from which crowd dynamics can be analysed in realtime, and interventions staged. being on android, this crowd node is a service running in the foreground, which means the app can come and go while the service runs. it maintains a notification, and while this is there, the phone won’t sleep until it powers down. the data logger registers for updates from all the sensors available on that device, and constantly scans for wifi base stations and bluetooth devices. getting some kind of audio fingerprint should be a useful future addition to the sensing. the server connection mints session IDs that keep things anonymous while tracking instances of the app, and receives the 1000-line, json-formatted log files either in bulk afterwards or as they’re written. in time, this should be a streaming connection for realtime use, with e.g. activity analysis and flocking algorithms running on the incoming data.
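to make the logging scheme concrete, here is a minimal sketch in python rather than the app's android code. it is an illustration under assumptions, not what the repos actually do: the record fields, the file naming, the `RotatingJsonLog` class and the use of a random uuid as the session ID are all made up for the example. the only details taken from above are the anonymous session ID and the 1000-line json log files.

```python
import json
import tempfile
import uuid
from pathlib import Path

LINES_PER_FILE = 1000  # the post mentions 1000-line log files


def mint_session_id() -> str:
    """Return a random ID with no link to the device or owner (assumed scheme)."""
    return uuid.uuid4().hex


class RotatingJsonLog:
    """Buffer sensor readings and write them out one file per N lines.

    Hypothetical helper: each line is one json object, and each completed
    file is a unit that could be uploaded as it is written or in bulk later.
    """

    def __init__(self, directory, session_id, lines_per_file=LINES_PER_FILE):
        self.directory = Path(directory)
        self.session_id = session_id
        self.lines_per_file = lines_per_file
        self.completed = []  # paths of files written so far
        self._buffer = []    # json lines waiting to be written

    def append(self, sensor, timestamp, values):
        """Add one reading; write a file once the buffer is full."""
        record = {
            "session": self.session_id,  # field names are assumptions
            "sensor": sensor,
            "t": timestamp,
            "values": values,
        }
        self._buffer.append(json.dumps(record))
        if len(self._buffer) >= self.lines_per_file:
            self.flush()

    def flush(self):
        """Write whatever is buffered to a new file and return its path."""
        if not self._buffer:
            return None
        name = f"{self.session_id}-{len(self.completed):05d}.jsonl"
        path = self.directory / name
        path.write_text("\n".join(self._buffer) + "\n", encoding="utf-8")
        self.completed.append(path)
        self._buffer = []
        return path


# usage: 2500 fake accelerometer readings end up as three files
log = RotatingJsonLog(tempfile.mkdtemp(), mint_session_id())
for i in range(2500):
    log.append("accelerometer", i * 0.02, [0.0, 0.0, 9.81])
log.flush()  # write the final, partial file
```

writing in fixed-size files like this means a dropped connection costs at most one file's worth of retrying, and the same files work for both the upload-as-you-go and the bulk-afterwards cases. a streaming connection would replace the file hand-off but could keep the same one-json-object-per-reading records.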